86% of businesses plan to unify their data in one place, but the sheer volume and variety of that data present many challenges. In fact, 51% report having 20 to 100 or more disparate data sources. Effective data management requires a centralized repository, and data lakes and data warehouses are two common ways to achieve this. However, the line between the two models is thin. Both make it easier for organizations to manage their data assets, yet they differ in structure, purpose, and functionality. So, data lake vs. data warehouse?

Organizations no longer have to pick one over the other, thanks to the rise of the data lakehouse model, a hybrid solution that combines the benefits of data lakes and data warehouses.


Data Warehouse vs. Data Lake: An In-Depth Comparison

These solutions share a common goal: data storage and management. Understanding the distinctions between the two can help organizations choose the right approach, or even a combination of both, to optimize their data strategies.

What is a Data Warehouse?

Data warehouses store, organize, and analyze a large amount of historical data to support business reporting and analytics. In this data management system, data analysts consolidate data from multiple sources and model it in a structured format for end-users. This process is called data modeling.

Data modeling—the data warehouse’s blueprint—uses a predefined schema that outlines relationships and hierarchies within the data. Through a logical framework, it minimizes the risk of data inconsistencies and facilitates efficient data retrieval.

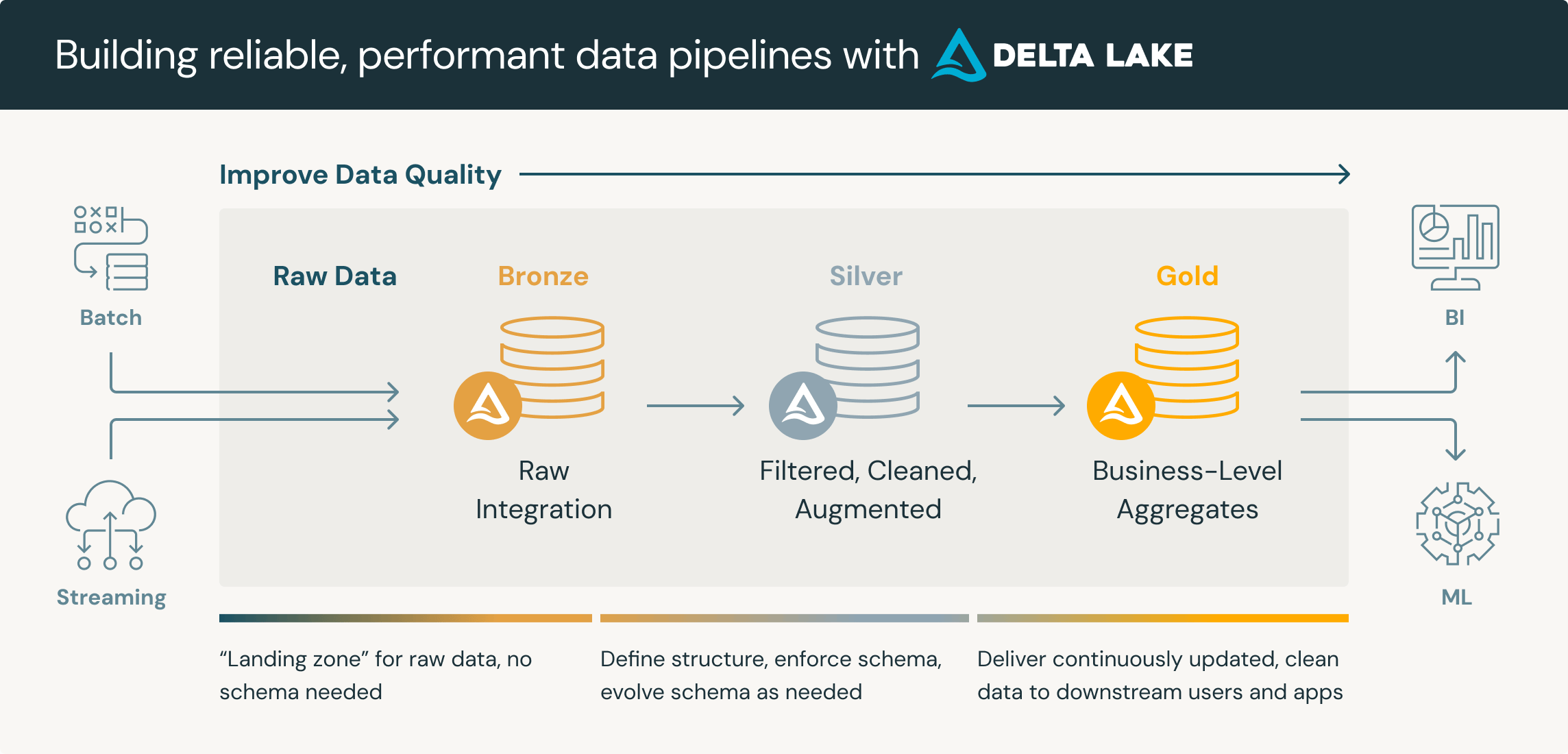
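To make the idea of a predefined schema concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a warehouse engine. The table names, columns, and sample rows are hypothetical and chosen only for illustration. The point is that the schema is declared before any data arrives, so rows that violate it are rejected at write time.

```python
import sqlite3

# Hypothetical retail schema; table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce foreign keys by default
conn.executescript("""
    CREATE TABLE products (
        product_code TEXT PRIMARY KEY,
        category     TEXT NOT NULL
    );
    CREATE TABLE sales (
        sale_id      INTEGER PRIMARY KEY,
        product_code TEXT NOT NULL REFERENCES products(product_code),
        sold_on      TEXT NOT NULL,
        amount       REAL NOT NULL CHECK (amount >= 0)
    );
""")

conn.execute("INSERT INTO products VALUES ('SKU-1', 'footwear')")
conn.execute("INSERT INTO sales VALUES (1, 'SKU-1', '2024-01-05', 59.90)")


def try_insert(row):
    """Return True if the row satisfies the schema, False if it is rejected."""
    try:
        conn.execute("INSERT INTO sales VALUES (?, ?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False


accepted = try_insert((2, "SKU-1", "2024-01-06", 20.0))
rejected_unknown_product = try_insert((3, "SKU-404", "2024-01-06", 20.0))
rejected_negative_amount = try_insert((4, "SKU-1", "2024-01-06", -5.0))
```

The relationship between `sales` and `products` and the `CHECK` on `amount` are what keep inconsistent records out of the tables that analysts later query.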

Data analysts may implement a medallion architecture with multiple levels of tables to reflect the degree of data enrichment or cleansing.

To illustrate this, consider a data warehouse designed for a retail business.

- Bronze Layer. Users ingest raw, structured data from the retailer’s POS sales transactions and inventory records. This layer captures data in its original format, so it serves as a historical archive and gives quick access to comprehensive data lineage.

- Silver Layer. Data from the bronze layer undergoes cleansing and merging. Data analysts standardize records (e.g., customer names, date formats, product codes), eliminate duplicate entries, and consolidate information.

- Gold Layer. This layer organizes curated data into project-specific tables, e.g., separate tables for customer behavior analysis, sales performance by category, and inventory levels.

With highly organized and labeled data, retrieval and analysis based on specific attributes become straightforward. Business analysts and marketing and sales teams rely on this to analyze verified data for efficient strategic planning.
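The three layers described above can be sketched in plain Python. This is a simplified illustration rather than how a production warehouse is built: the sample rows, field names, and helper functions (`parse_date`, `to_silver`, `to_gold_sales_by_category`) are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Bronze: raw POS rows kept exactly as received (hypothetical sample data).
bronze = [
    {"sale_id": "1", "customer": " alice SMITH ", "date": "2024-01-05",
     "sku": "sku-1", "category": "Footwear", "amount": "59.90"},
    {"sale_id": "1", "customer": " alice SMITH ", "date": "2024-01-05",
     "sku": "sku-1", "category": "Footwear", "amount": "59.90"},  # duplicate entry
    {"sale_id": "2", "customer": "Bob Jones", "date": "05/01/2024",
     "sku": "SKU-2", "category": "apparel", "amount": "20.00"},
]


def parse_date(text):
    """Normalize the two date formats assumed in this example to ISO 8601."""
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def to_silver(rows):
    """Silver: drop duplicate sale IDs and standardize names, dates, and codes."""
    seen, silver = set(), []
    for row in rows:
        if row["sale_id"] in seen:
            continue
        seen.add(row["sale_id"])
        silver.append({
            "sale_id": int(row["sale_id"]),
            "customer": row["customer"].strip().title(),
            "date": parse_date(row["date"]),
            "sku": row["sku"].upper(),
            "category": row["category"].lower(),
            "amount": float(row["amount"]),
        })
    return silver


def to_gold_sales_by_category(silver_rows):
    """Gold: one purpose-built table, here total sales per category."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["category"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold_sales_by_category(silver)
```

Note that `bronze` is never modified. Keeping the raw rows intact is what lets the silver and gold tables be rebuilt or audited later.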

What is a Data Lake?

A data lake is a centralized repository that stores and manages structured, semi-structured, and unstructured data. Unlike data warehouses, which require a rigid schema before data ingestion, data lakes can hold data in its raw form. This reduces the need for upfront data modeling, saving time and resources.

The retailer in the previous example can gain insights from more than sales transactions and inventory levels. It can also store semi-structured data from website interaction logs and unstructured data like customer surveys, product reviews, and product images.

This schema-on-read functionality enables data power users (i.e., data scientists and engineers) to define structure only when accessing data. It’s more scalable for advanced use cases since users can quickly adapt to new data sources and formats. They can incorporate real-time data, test hypotheses, and support evolving analytics needs. However, data lakes present challenges in accessibility and usability. Non-technical, line-of-business teams need structured, reliable data that’s easy to access and interpret without extensive tech support.
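As a rough sketch of schema-on-read, the example below keeps raw events as JSON lines and applies a structure only when a consumer reads them. The event fields, the two schemas, and the `read_with_schema` helper are assumptions made for illustration and do not belong to any particular data lake product.

```python
import json

# Raw website interaction logs stored as-is, one JSON object per line
# (hypothetical sample data; records do not share the same fields).
raw_lines = [
    '{"event": "view", "sku": "SKU-1", "ts": "2024-01-05T10:00:00", "device": "mobile"}',
    '{"event": "purchase", "sku": "SKU-1", "ts": "2024-01-05T10:02:00", "amount": "59.90"}',
    '{"event": "view", "sku": "SKU-2", "ts": "2024-01-05T10:05:00", "referrer": "newsletter"}',
]


def read_with_schema(lines, schema):
    """Project each raw record onto `schema` (field name -> converter) at read time.

    Fields missing from a record become None; fields outside the schema are ignored.
    """
    for line in lines:
        record = json.loads(line)
        yield {
            field: convert(record[field]) if field in record else None
            for field, convert in schema.items()
        }


# Two consumers define two different structures over the same raw data.
purchases_schema = {"event": str, "sku": str, "amount": float}
views_schema = {"event": str, "sku": str, "device": str}

purchases = [r for r in read_with_schema(raw_lines, purchases_schema)
             if r["event"] == "purchase"]
views = [r for r in read_with_schema(raw_lines, views_schema)
         if r["event"] == "view"]
```

Nothing was rejected at ingestion, which is what makes new sources easy to add. The trade-off is that every reader has to know which fields exist and how to interpret them, which is the accessibility challenge noted above.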

A brief outline of the data lake vs. data warehouse discussion:

| | Data Warehouse | Data Lake |
| --- | --- | --- |
| Data Type | Structured data | Structured, semi-structured, and unstructured data |
| Schema | Schema-on-write (predefined schema before data ingestion) | Schema-on-read (structure defined when accessing data) |
| Data Processing | Requires data modeling and cleansing upfront | Stores raw data, with minimal pre-processing |
| Accessibility | Easily accessible for non-technical, line-of-business users | Primarily accessible to data power users (data scientists, engineers) |
| Use Cases | Business reporting, analytics, and operational decision-making | Advanced analytics, machine learning, and big data processing |
| Scalability | Limited scalability for unstructured data | Highly scalable, suitable for handling large volumes of diverse data |
| Cost and Storage | More costly due to structured storage and processing | More cost-effective for large, diverse data sets |
| Typical Users | Business analysts, sales and marketing teams | Data scientists, engineers, advanced analytics teams |
| Key Limitation | Rigid structure may limit flexibility for evolving analytics | Can be complex and challenging for non-technical access |

The Rise of the Data Lakehouse: Bridging the Gap Between Flexibility and Structure

Businesses transitioned from cloud data warehouses (42%), enterprise data warehouses (35%), and data lakes (22%) to a data lakehouse architecture. The primary reasons: cost efficiency and ease of use.

A data lakehouse combines a data lake’s schema flexibility with a traditional data warehouse’s data management and governance features. It allows all types of data—structured, semi-structured, and unstructured—to reside in a single platform.

When processing data, users can capture raw data in its native format and define its structure later, as needed. This removes the need to maintain multiple versions of the same data and minimizes the risk of data silos.

How the Lakehouse Architecture by Databricks Future-Proofs Your Data Strategy

Databricks, a data intelligence platform built on the data lakehouse architecture, features robust capabilities to manage and store enterprise data. It facilitates the analysis of large datasets, allowing data scientists to train models, validate results, and deploy solutions quickly.

Moreover, Databricks equips organizations with business intelligence support, as well as reports and dashboards for analytical output. These facilitate the development and deployment of advanced AI and machine learning models.

With advanced analytical tools, data scientists can explore relationships within the data and discover patterns to inform strategic decisions.

Example

AT&T, a communications service provider, adopted Databricks to overcome the limitations of its legacy on-premises data lake.

The platform’s end-to-end streaming capabilities allowed AT&T to ingest and standardize large volumes of structured and unstructured data from multiple systems. They then built ML models that deliver alerts and recommendations for employees across the organization. This transition resulted in an 80% reduction in fraud attacks that would’ve otherwise cost AT&T millions of dollars.

Moreover, for department-specific or use case-specific data needs, Databricks features Data Mart capabilities that create tailored data environments. It provides curated datasets for different business departments, so teams have easy access to the data they require.

Databricks lets businesses leverage their data’s full spectrum to support informed decision-making and strategic initiatives.

7 Best Practices for Implementing a Data Lakehouse

The following section outlines practical steps for optimizing the use of data intelligence platforms like Databricks.

- Define objectives. Outline the goals and use cases for your data lakehouse. Objectives can help determine whether you need structured (e.g., from databases) or unstructured data (e.g., social media posts, customer reviews).

- Set up infrastructure. Assess your organization’s workload requirements to determine the appropriate computing resources. Then, select a cloud provider such as AWS, Azure, or GCP and configure networking, security, and access controls.

- Automate data ingestion. Build pipelines to collect batch and streaming data from multiple sources. For better quality control, implement a layered architecture that separates raw (bronze), cleansed (silver), and business-specific data (gold).

- Optimize data storage. Adopting scalable storage solutions like Delta Lake enhances data integrity by supporting ACID (Atomicity, Consistency, Isolation, Durability) transactions. Implement data partitioning and indexing to further optimize performance.

- Streamline data transformation. Develop ETL pipelines to automate data cleaning, transformation, and loading. Tools like Apache Spark can help distribute tasks across multiple nodes for near real-time, large-scale data processing. Orchestration tools then sequence these Spark jobs to complete ETL steps on schedule.

- Enforce data governance and quality. Apply frameworks like Unity Catalog for access controls, data usage tracking, and compliance across datasets. Moreover, conduct quality checks to identify issues like missing values or inconsistencies early in the data pipeline.

- Enable analytics and machine learning. Set up tools like Databricks SQL to allow interactive querying for non-tech users and enable shareable dashboards across teams.

ML frameworks like MLflow, on the other hand, can streamline the machine learning lifecycle. Data scientists can focus more on developing models rather than managing them.
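The quality checks mentioned in the governance step can start very simply. The sketch below counts missing required values and duplicate keys in a batch before it moves further down the pipeline. The `quality_report` function, the field names, and the sample batch are hypothetical; dedicated tooling would normally replace a hand-rolled check like this.

```python
def quality_report(rows, required_fields, key_field):
    """Count missing required values and collect duplicate keys in a batch of records."""
    missing = {field: 0 for field in required_fields}
    seen, duplicate_keys = set(), []
    for row in rows:
        for field in required_fields:
            # Treat both None and the empty string as a missing value.
            if row.get(field) in (None, ""):
                missing[field] += 1
        key = row.get(key_field)
        if key in seen:
            duplicate_keys.append(key)
        seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicate_keys": duplicate_keys}


# Hypothetical batch arriving from an ingestion pipeline.
batch = [
    {"order_id": 1, "sku": "SKU-1", "amount": 59.9},
    {"order_id": 2, "sku": "", "amount": 20.0},
    {"order_id": 2, "sku": "SKU-2", "amount": None},
]

report = quality_report(batch, required_fields=["sku", "amount"], key_field="order_id")
```

A pipeline could use a report like this to stop a batch from being promoted from the raw layer to the cleansed layer until the counts are zero or below an agreed threshold.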

A Hybrid Strategy

Data lakes and data warehouses are both essential to a robust data management strategy. A data lakehouse merges the two, holding structured and unstructured data in one place for better data quality, storage, and analytics capabilities. As Databricks aptly puts it, “The best data warehouse is a lakehouse.” By unifying features, a lakehouse provides the best of both worlds.

Maximize Your Databricks SQL Investment

Derive greater value from your Databricks SQL investment with Infoverity’s data governance, data quality checks, migration support, and other robust functionalities.

The platform can help automate the transition of your on-premises assets to the cloud without risk or downtime. Contact us to learn more.

FAQ – Data Lake vs. Data Warehouse

What is the main difference between a data lake and a data warehouse?

A data lake stores raw data, whether structured, semi-structured, or unstructured, providing flexibility for advanced analytics and machine learning workflows. It uses a schema-on-read approach, defining structure only upon retrieval. A data warehouse, on the other hand, organizes structured data with schema-on-write requirements before ingestion, ensuring easily accessible and refined data for business reporting and analytics. Each serves a distinct purpose based on the needs of its users: data lakes for scalability and experimentation, warehouses for precision and clarity in reporting.

Why are data lakehouses gaining popularity over traditional architectures?

Data lakehouses combine the best features of data lakes and data warehouses, offering flexibility for raw data storage along with the governance and organization benefits of a warehouse. This hybrid solution enables organizations to store structured, semi-structured, and unstructured data in one platform, minimizing data silos and reducing reliance on maintaining separate systems. By leveraging platforms like Databricks, businesses can unify their data strategies to support scalable machine learning models, while ensuring streamlined access for analytics and decision-making.

What challenges do businesses face in transitioning to a data lakehouse?

Transitioning to a data lakehouse may require significant changes in infrastructure and workflows. Common challenges include adapting legacy systems, automating data ingestion pipelines, enforcing governance frameworks to manage compliance, and ensuring data quality across structured and unstructured sources. Selecting the right cloud provider and implementing scalable storage solutions, such as Delta Lake, is essential to mitigate these challenges while optimizing resources for future growth.

How can organizations maximize their investment in Databricks and data lakehouses?

Organizations can optimize their data lakehouse strategy by defining clear goals, automating data pipelines for batch and real-time data ingestion, and adopting frameworks like Unity Catalog for governance and compliance. By leveraging Databricks SQL, business teams can access curated dashboards for interactive querying, while machine learning workflows can be streamlined through tools like MLflow. Infoverity’s customized support with migration, governance, and quality checks ensures seamless implementation to realize the full potential of Databricks and lakehouse architectures.
